BioTech

Discover how our specialised AI solutions revolutionise biotech research and development through custom tokenisation, enhanced data handling, and secure processing tailored specifically for biological sequences and scientific workflows.

Efficient Representation of Biological Sequences

Standard tokenisers used in general-purpose models are not designed for unique sequences like DNA, RNA, or proteins, where small changes (e.g., a single base mutation) can carry significant meaning.

Solution: A custom tokeniser, created at the pre-training stage, can treat specific biological units, such as nucleotides (A, T, G, C) or amino acids, as individual tokens, preserving the biological structure and improving downstream predictions.

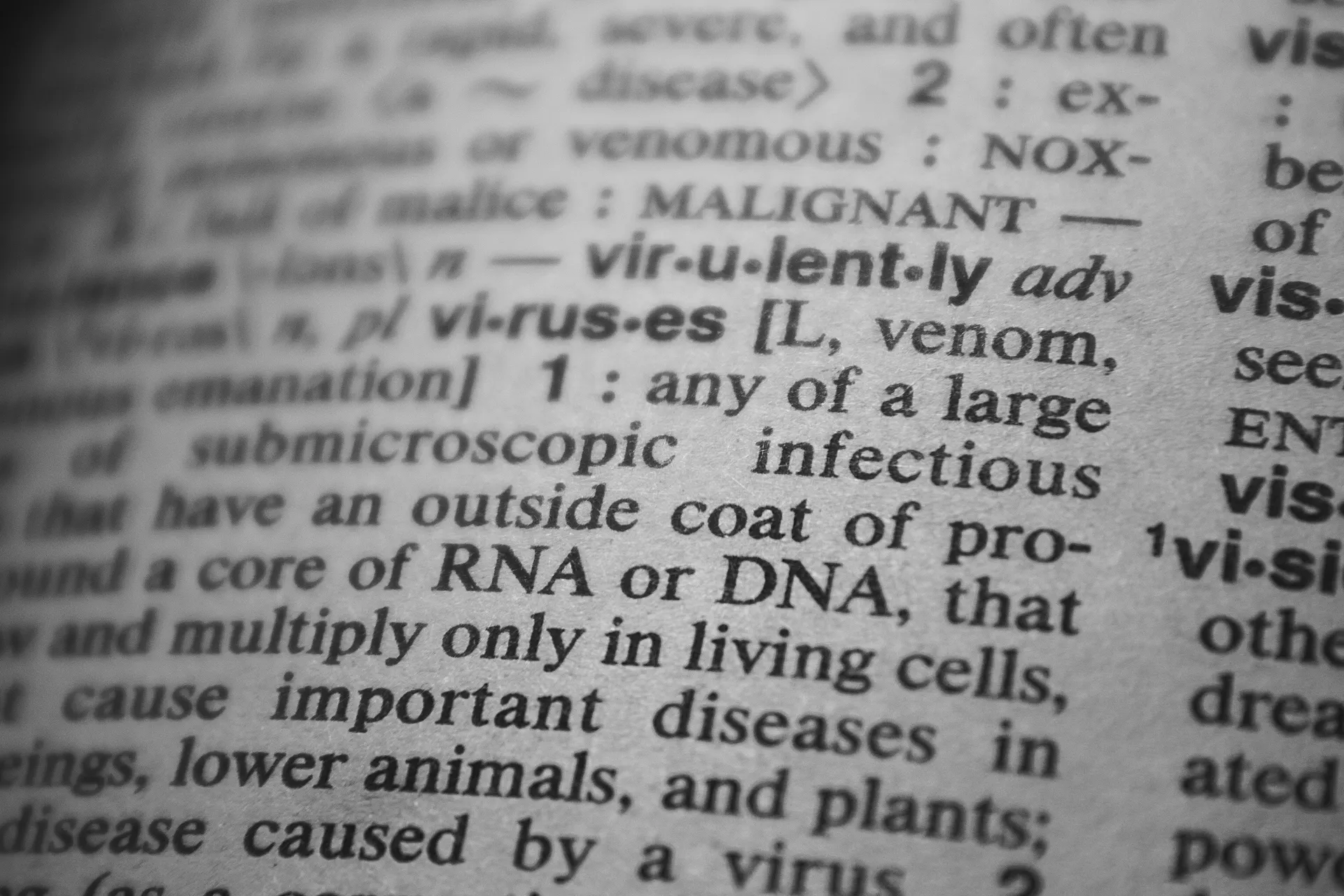
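
As an illustration of the idea above, here is a minimal sketch of a nucleotide-level tokeniser in Python. The class name `NucleotideTokenizer`, the special tokens `<pad>` and `<unk>`, and the example sequences are assumptions made for this example, not part of any specific product or library. The sketch shows that a single base substitution changes exactly one token, so the mutation stays visible to the model.

```python
from typing import Dict, List


class NucleotideTokenizer:
    """Hypothetical character-level tokeniser: one token per nucleotide."""

    def __init__(self, alphabet: str = "ACGT") -> None:
        # Special tokens come first, followed by one entry per nucleotide.
        specials = ["<pad>", "<unk>"]
        self.id_to_token: List[str] = specials + list(alphabet)
        self.token_to_id: Dict[str, int] = {
            token: idx for idx, token in enumerate(self.id_to_token)
        }
        self.unk_id = self.token_to_id["<unk>"]

    def encode(self, sequence: str) -> List[int]:
        # Each base maps to its own ID; unknown symbols map to <unk>.
        return [self.token_to_id.get(base, self.unk_id) for base in sequence.upper()]

    def decode(self, ids: List[int]) -> str:
        return "".join(self.id_to_token[i] for i in ids)


tokenizer = NucleotideTokenizer()
reference = tokenizer.encode("ACGTAC")
mutant = tokenizer.encode("ACGAAC")  # single T->A substitution

# Positions where the two encodings differ.
changed = [i for i, (r, m) in enumerate(zip(reference, mutant)) if r != m]
print(changed)  # [3]
```

A production tokeniser would typically also handle ambiguity codes, amino-acid alphabets, and sequence boundary markers; those are left out here to keep the sketch short.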

Handle Domain-Specific Vocabulary

BioTech involves specialised terms, abbreviations, and symbols (e.g., chemical names, protein sequences, or genetic codes) that general-purpose tokenisers often struggle with.

Solution: Custom tokenisers, created at the pre-training stage, can break down complex scientific terms or symbols into meaningful units, ensuring the model captures their full context and meaning.
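
One simple way to picture this is greedy longest-match segmentation against a domain vocabulary. The sketch below is illustrative only: the function `segment_term` and the small vocabulary of word parts are hypothetical, and a real tokeniser would learn its vocabulary from a biotech corpus rather than use a hand-written list.

```python
from typing import List, Set


def segment_term(term: str, vocab: Set[str]) -> List[str]:
    """Split a term into the longest vocabulary entries, scanning left to right."""
    text = term.lower()
    tokens: List[str] = []
    i = 0
    while i < len(text):
        match = None
        # Try the longest candidate substring first, then shrink.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                match = text[i:j]
                break
        if match is None:
            # No vocabulary entry starts here: fall back to a single character.
            tokens.append(text[i])
            i += 1
        else:
            tokens.append(match)
            i += len(match)
    return tokens


# Hypothetical domain vocabulary of meaningful word parts.
domain_vocab = {"phospho", "fructo", "kinase", "deoxy", "ribo", "nucle", "ase"}

print(segment_term("phosphofructokinase", domain_vocab))
# ['phospho', 'fructo', 'kinase']
print(segment_term("deoxyribonuclease", domain_vocab))
# ['deoxy', 'ribo', 'nucle', 'ase']
```

Each resulting piece corresponds to a meaningful part of the term, whereas a general-purpose vocabulary may split the same word at arbitrary points.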

Improve Model Efficiency and Performance

BioTech data often includes repetitive sequences or highly structured text that can inflate token counts with standard tokenisers, leading to inefficient model processing.

Solution: Custom tokenisers, created at the pre-training stage, optimise the sequence length by grouping repetitive patterns into single tokens, reducing computational overhead and improving model focus.
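
The grouping of repetitive patterns can be sketched with a small byte-pair-encoding style merge procedure. This is a simplified illustration under stated assumptions, not a description of a specific implementation: the helper names `learn_merges`, `apply_merge`, and `tokenize`, and the toy repeat sequences, are invented for this example.

```python
from collections import Counter
from typing import List, Tuple

Pair = Tuple[str, str]


def apply_merge(tokens: List[str], pair: Pair) -> List[str]:
    """Replace every adjacent occurrence of `pair` with one merged token."""
    merged: List[str] = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def learn_merges(sequences: List[str], num_merges: int) -> List[Pair]:
    """Repeatedly merge the most frequent adjacent token pair in the corpus."""
    corpus = [list(seq) for seq in sequences]
    merges: List[Pair] = []
    for _ in range(num_merges):
        pair_counts: Counter = Counter()
        for tokens in corpus:
            pair_counts.update(zip(tokens, tokens[1:]))
        if not pair_counts:
            break
        best_pair, count = pair_counts.most_common(1)[0]
        if count < 2:
            break  # nothing repeats, so further merges would not help
        merges.append(best_pair)
        corpus = [apply_merge(tokens, best_pair) for tokens in corpus]
    return merges


def tokenize(sequence: str, merges: List[Pair]) -> List[str]:
    """Apply learned merges, in order, to a new sequence."""
    tokens = list(sequence)
    for pair in merges:
        tokens = apply_merge(tokens, pair)
    return tokens


# Toy corpus containing a repeated trinucleotide.
merges = learn_merges(["CAGCAGCAGCAG", "CAGCAGCAG"], num_merges=2)
tokens = tokenize("CAGCAGCAG", merges)
print(len("CAGCAGCAG"), "->", len(tokens))  # 9 -> 3
```

In this toy case two merges are enough to turn the repeated unit into a single token, so a nine-base sequence is represented by three tokens instead of nine.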

Enhance Multimodal Integration

When working with multimodal data (e.g., integrating text, images, and biological sequences), consistent tokenisation is key for effective fusion.

Solution: Custom tokenisers, created at the pre-training stage, can harmonise tokenisation strategies across modalities, ensuring seamless integration of diverse data types in a single LLM framework.
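
As a rough sketch of what a harmonised strategy might look like for two of these modalities (free text and DNA), the example below routes each span to the appropriate scheme inside one token stream. The `<dna>` and `</dna>` markers, the function `tokenize_mixed`, and the whitespace splitting of text are all assumptions made for illustration; images and other modalities are not covered.

```python
import re
from typing import List

# Hypothetical markup: DNA spans are wrapped in <dna>...</dna> in the input text.
DNA_SPAN = re.compile(r"<dna>([ACGTN]+)</dna>")


def tokenize_mixed(text: str) -> List[str]:
    """Tokenise prose by whitespace and DNA spans one nucleotide at a time."""
    tokens: List[str] = []
    pos = 0
    for match in DNA_SPAN.finditer(text):
        # Prose before the DNA span: simple whitespace split.
        tokens.extend(text[pos:match.start()].split())
        # DNA span: boundary markers plus one token per base.
        tokens.append("<dna>")
        tokens.extend(match.group(1))
        tokens.append("</dna>")
        pos = match.end()
    # Any prose after the last DNA span.
    tokens.extend(text[pos:].split())
    return tokens


print(tokenize_mixed("Promoter <dna>TATA</dna> binds TBP"))
# ['Promoter', '<dna>', 'T', 'A', 'T', 'A', '</dna>', 'binds', 'TBP']
```

The boundary markers let a single model see where one modality ends and another begins while sharing one vocabulary and one sequence.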

Improved Generalisation for Biotech Tasks

General-purpose LLMs trained on diverse internet data struggle to generalise effectively for biotech-specific tasks.

Solution: Starting with a biotech-trained base model provides inherent domain expertise. This approach significantly improves fine-tuning for specific use cases, requiring less data and effort while achieving superior performance.

Safer for Sensitive Data

General-purpose LLMs trained on internet-scale datasets often lack transparency and control over the data sources used. This poses significant risks for biotech applications that handle sensitive and regulated information, such as patient records or proprietary research.

Solution: Training from scratch on high-quality, curated biotech datasets ensures full control and traceability over the training pipeline, enhancing data privacy and compliance with strict regulations like GDPR and HIPAA.