Software Development

Revolutionize your software development with our suite of specialized LLM solutions, from secure on-device coding assistants to unlimited-context models that handle complex codebases with ease.

Coding AI Assistant On-Device

Because the coding LLM is trained from scratch to be compact and efficient, the assistant runs smoothly on devices ranging from laptops to edge systems without demanding heavy computational resources. For businesses handling highly sensitive data, such as proprietary codebases, this keeps all information on the device or within the company network, so the code never has to leave your control.

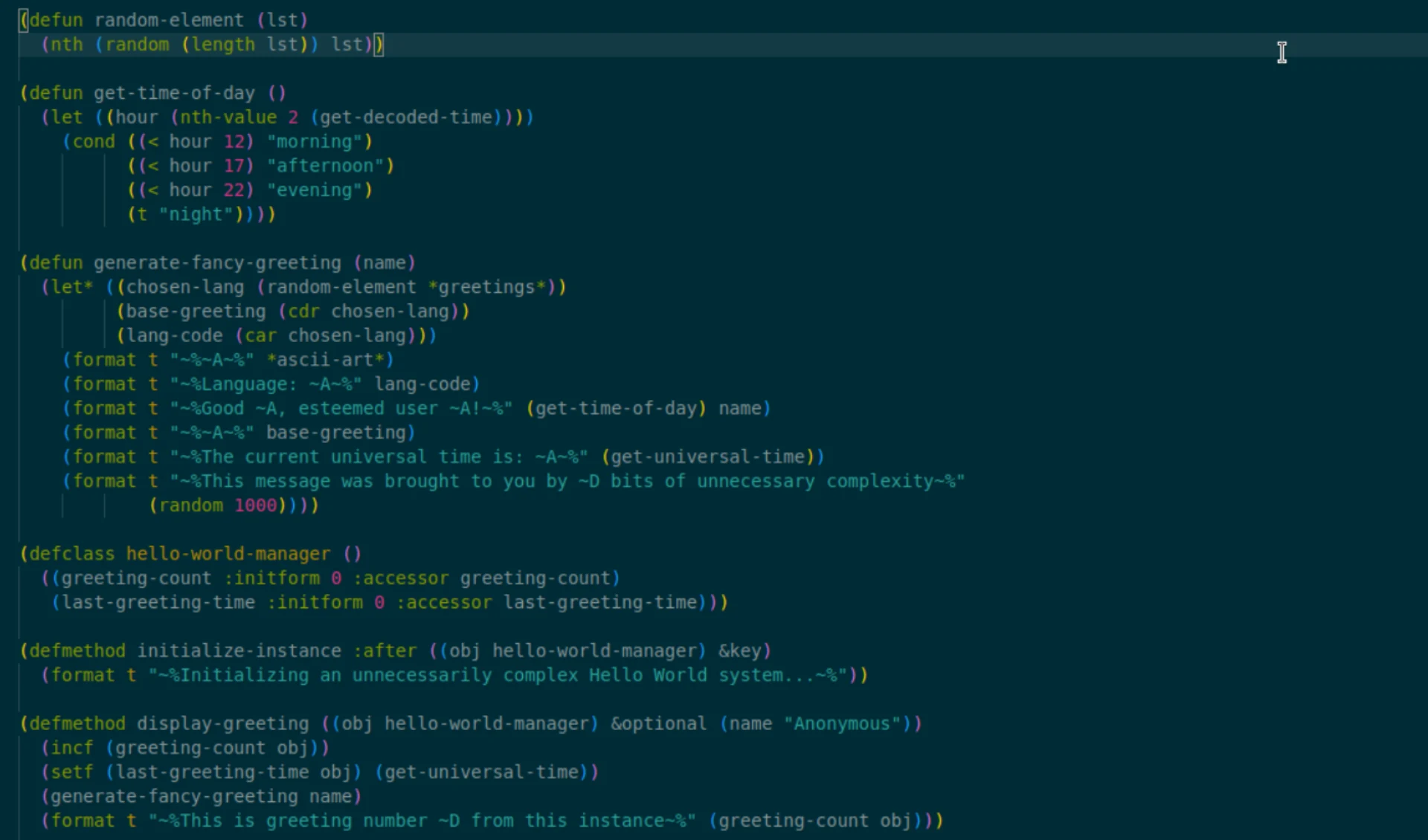

LLMs for Niche Programming Languages

Create a custom, compact LLM designed for niche programming languages such as COBOL, Ada, Forth, Smalltalk, and Lisp. Pre-training your model on high-quality datasets selected from our platform, combined with synthetic data you can generate with us, helps the model perform at its full potential.

Low Latency LLM for Code Generation

Unlock faster code generation by pre-training your own coding LLMs. Because these models specialise in programming languages and code structures, they can be smaller than general-purpose models while still quickly identifying patterns and context, so they deliver accurate code with minimal latency. Instead of working around the size and complexity of off-the-shelf models, get optimised, efficient performance tailored to your coding needs. Pre-train your own LLM today for a faster, seamless coding experience.

Unlimited Context in LLMs for Coding

Our platform lets you choose between the open architectures of existing open-source LLMs and our in-house NLM family architecture, which supports unlimited context windows. This is particularly valuable for coding: your models can process entire large codebases and long, context-rich sequences without truncating them to a fixed context length, leading to more accurate code generation and debugging and to better overall performance on complex programming tasks.